IPsec routing has a reputation for being unwieldy. This isn’t entirely undeserved. Of the two main ways IPsec tunnels are configured, policy-based configurations handle routing especially badly. They bypass the standard routing table entirely, which makes packet flow harder to troubleshoot and adds excessive administrative overhead. Unfortunately, certain vendors don’t allow any other type. Even among vendors that support the other method, route-based tunnels, it’s not always smooth sailing.

Let’s take a look at the most common IPsec routing scheme, bare IPsec, using phase 2 selectors as routing rules. Phase 2 selectors are declarations by the devices on both sides of the tunnel indicating what traffic is allowed through. If they don’t match, the tunnel doesn’t come up.

For example, device A sits at the border of network 10.100.100.0/24 with its own internal IP address, 10.100.100.1. Device B’s internal side sits at 10.200.200.1/24. To keep things simple, the phase 2 selectors are set as 10.100.100.0/24 and 10.200.200.0/24. Simple enough. Sort of.
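To make the matching rule concrete, here’s a minimal Python sketch using the standard `ipaddress` module. It models a phase 2 selector as a simple (local, remote) pair of networks and assumes exact-match behavior, where each side’s local network must equal the other side’s remote network. That’s a simplification (IKEv2 implementations can narrow selectors, for instance), and `Selector` and `selectors_match` are hypothetical names for illustration only.

```python
from dataclasses import dataclass
from ipaddress import IPv4Network, ip_network


@dataclass(frozen=True)
class Selector:
    """Simplified phase 2 selector: traffic from `local` to `remote` may use the tunnel."""
    local: IPv4Network
    remote: IPv4Network


def selectors_match(a: Selector, b: Selector) -> bool:
    """Exact-match check (an assumption): each side's local net is the other's remote net."""
    return a.local == b.remote and a.remote == b.local


# Device A guards 10.100.100.0/24; device B guards 10.200.200.0/24.
device_a = Selector(ip_network("10.100.100.0/24"), ip_network("10.200.200.0/24"))
device_b = Selector(ip_network("10.200.200.0/24"), ip_network("10.100.100.0/24"))
print(selectors_match(device_a, device_b))  # True: the tunnel can come up

# A typo on B's side (10.200.20.0/24 instead of 10.200.200.0/24) breaks the match.
typo_b = Selector(ip_network("10.200.20.0/24"), ip_network("10.100.100.0/24"))
print(selectors_match(device_a, typo_b))  # False: the tunnel stays down
```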

From a network engineer’s point of view, this is an uncomfortable proposition. Where exactly does 10.200.200.0/24 sit in relation to 10.100.100.0/24? Normally, there’d be some sort of link between them (usually a point-to-point link with IPs on both ends that a routing table could point to). Since this particular IPsec tunnel doesn’t contain any kind of logical link with IPs on the ends, the routing table simply has an entry for 10.200.200.0/24 as “over on the other side of the IPsec tunnel.”

This works, but it isn’t very clean. Worse is when a policy-based IPsec configuration is active, in which case there’s no routing table entry at all. The routing logic instead comes from a policy that states “when a packet from 10.100.100.0/24 has a destination within 10.200.200.0/24, shove it through the encryption engine and down the IPsec tunnel, where it will be decrypted and forwarded on its way.” With a large number of such policies active, this can quickly turn into a game of “find the policy that governed the packet flow,” which is no one’s favorite.
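A policy-based setup behaves roughly like an ordered rule list searched from the top, so explaining a packet’s path means finding the first rule that matched it. The sketch below models that with a hypothetical `Policy` record and a first-match lookup. The rule names, the second catch-all rule, and the first-match-wins ordering are illustrative assumptions; actual evaluation order varies by vendor.

```python
from dataclasses import dataclass
from ipaddress import IPv4Address, IPv4Network, ip_address, ip_network
from typing import Optional


@dataclass(frozen=True)
class Policy:
    name: str              # hypothetical rule name
    source: IPv4Network
    destination: IPv4Network
    action: str            # "tunnel" = encrypt and send down IPsec; "permit" = forward normally


# Order matters: the first matching policy decides the packet's fate.
POLICIES = [
    Policy("to-site-b", ip_network("10.100.100.0/24"), ip_network("10.200.200.0/24"), "tunnel"),
    Policy("catch-all", ip_network("10.100.100.0/24"), ip_network("0.0.0.0/0"), "permit"),
]


def find_policy(src: IPv4Address, dst: IPv4Address) -> Optional[Policy]:
    """Return the first policy whose source and destination both contain the packet's addresses."""
    for policy in POLICIES:
        if src in policy.source and dst in policy.destination:
            return policy
    return None  # no match: treated as dropped in this sketch


hit = find_policy(ip_address("10.100.100.100"), ip_address("10.200.200.200"))
print(hit.name, hit.action)  # to-site-b tunnel
```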

Complicating matters is the fact that some vendors don’t even care what you put in the phase 2 selector, and instead rely on routing table entries and policies for routing logic. On those devices, the phase 2 selector is simply a config line that exists to match the other side, so as far as routing is concerned, there’s a good chance it’s completely meaningless.

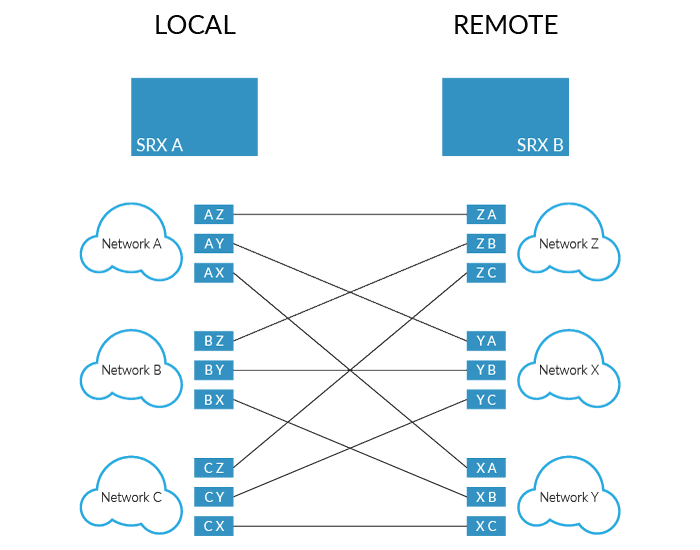

Finally, this is not a scalable configuration. What happens when we have multiple networks on each side? That’s right, we need a phase 2 selector for each possible combination. In our example, with one network on each side, we need one selector per side. Two networks on each side of the link bump that up to 8 selectors: 4 per side, since each local network needs a selector to each remote network. In the figure above, 3 non-contiguous networks on each side need 18 selectors, 9 for each side! And that’s if you’re lucky enough to have CIDR-specified networks on each side. Often, the administrator in charge of the remote network just wants to allow individual IPs to traverse the tunnel. Can you imagine the number of selectors you’d need if they had a list of 10+ individual IPs?
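The growth is multiplicative: with n networks on one side and m on the other, each side needs n × m selectors, or 2nm in total. The short sketch below enumerates the pairs with `itertools.product`, using the three local and three remote subnets from the worked example later in this article, and then swaps in ten hypothetical individual /32 hosts on the remote side.

```python
from ipaddress import ip_network
from itertools import product

local_nets = [ip_network(n) for n in ("10.100.10.0/24", "10.100.100.0/24", "10.100.111.0/24")]
remote_nets = [ip_network(n) for n in ("10.200.20.0/24", "10.200.200.0/24", "10.200.222.0/24")]


def selector_pairs(local, remote):
    """Every (local, remote) combination needs its own phase 2 selector on this side."""
    return list(product(local, remote))


side_a = selector_pairs(local_nets, remote_nets)  # configured on the local device
side_b = selector_pairs(remote_nets, local_nets)  # mirrored on the remote device
print(len(side_a), len(side_b), len(side_a) + len(side_b))  # 9 9 18

# Ten individual /32 hosts on the remote side instead of three subnets (hypothetical):
remote_hosts = [ip_network(f"10.200.200.{i}/32") for i in range(10, 20)]
per_side = len(selector_pairs(local_nets, remote_hosts))
print(per_side, 2 * per_side)  # 30 60
```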

It should be clear at this point that bare IPsec phase 2 selectors are a poor choice when it comes to routing logic. What we need instead is a standard “virtual” cross-connect: something that acts like a point-to-point link. Not only that, but we need to separate routing logic from security and firewall logic instead of mashing them together in a horrible Frankenstein creation. Luckily, this kind of thing exists and is well supported across multiple vendors. I’m talking about Generic Routing Encapsulation, or GRE.

GRE is a relatively simple tunneling protocol that operates at layer 3 of the OSI model. It is itself carried over IP (as IP protocol 47), it can encapsulate a variety of network-layer payloads, including IPv4, IPv6, and multicast traffic, and it presents itself to the router as an ordinary point-to-point interface. What this means is that routing protocols run over GRE just as they would over a physical link, including OSPF, which relies on multicast, and BGP. And because a GRE tunnel operates like a regular link, its behavior is predictable (unlike a bare IPsec tunnel, which sits in a gray area between a logical link and a policy route). GRE tunnels are also stateless, with no session to set up or tear down, making them very useful in situations where the underlying transport may or may not be available at any one time.
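To show how little GRE actually adds, here’s a sketch that builds the basic header defined in RFC 2784: a 16-bit field holding the checksum-present bit, reserved bits, and version (all zero in the simplest case), followed by a 16-bit protocol type that uses EtherType values (0x0800 for IPv4, 0x86DD for IPv6). The optional checksum, key, and sequence-number fields are deliberately left out, and the function names are hypothetical.

```python
import struct
from typing import Tuple

ETHERTYPE_IPV4 = 0x0800
ETHERTYPE_IPV6 = 0x86DD


def gre_header(protocol_type: int) -> bytes:
    """Basic 4-byte GRE header (RFC 2784): checksum bit 0, reserved bits 0, version 0."""
    flags_and_version = 0x0000
    return struct.pack("!HH", flags_and_version, protocol_type)


def gre_encapsulate(inner_packet: bytes, protocol_type: int = ETHERTYPE_IPV4) -> bytes:
    """Prepend the GRE header; the result becomes the payload of an outer IP packet (protocol 47)."""
    return gre_header(protocol_type) + inner_packet


def gre_decapsulate(gre_packet: bytes) -> Tuple[int, bytes]:
    """Strip a basic GRE header, returning (protocol type, inner packet)."""
    flags_and_version, protocol_type = struct.unpack("!HH", gre_packet[:4])
    if flags_and_version != 0:
        raise ValueError("optional GRE fields and non-zero versions are not handled in this sketch")
    return protocol_type, gre_packet[4:]


print(gre_header(ETHERTYPE_IPV4).hex())  # 00000800
```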

How does this help with IPsec?

By configuring a GRE tunnel between the endpoints of the IPsec tunnel, we can ignore all the odd constraints of routing over IPsec and simply deal with a bog-standard GRE link. In addition, we can treat the GRE interface like any other interface in our security configuration and clearly define policies that allow traffic in and out, as opposed to a security policy that’s also handling routing, which leads to an extremely confusing config. Our previous example, with 18 phase 2 selectors handling full-mesh routing, can be replaced with just two selectors carrying a GRE link, one on each side. Routing is handled via traditional static or dynamic routing tools. If we ever need to add or remove networks on either side of the link, we simply add or remove routing entries. If you’re using dynamic routing, you don’t even have to do that, as the routing protocol does it for you. Compared to the IPsec method of maintaining matching phase 2 selectors, where the number of selectors grows multiplicatively with the number of networks on each side, this is a huge win in terms of time savings and reduced configuration complexity.

Let’s take a look at an actual example.

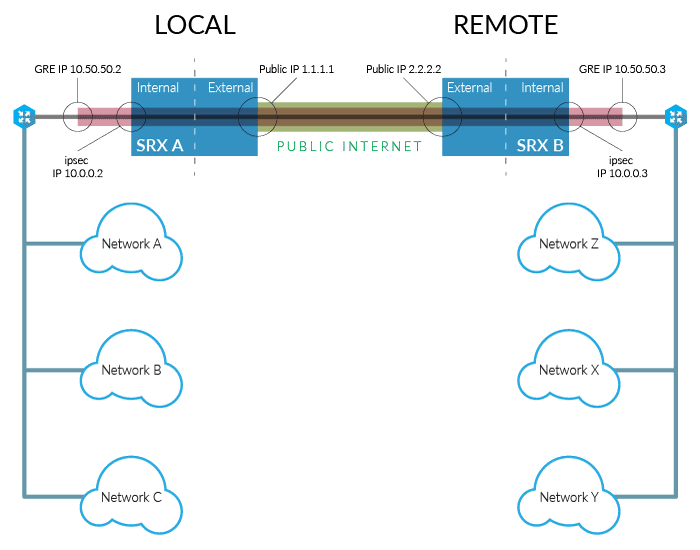

Here we have two IPsec endpoints that can reach each other over the internet using their public IPs, 1.1.1.1 and 2.2.2.2. If you recognize these example IPs, it’s because we use a lot of Juniper equipment here at ServerCentral. Behind 1.1.1.1 (we’ll call this the local network) are 3 subnets: 10.100.10.0/24, 10.100.100.0/24, and 10.100.111.0/24. Behind 2.2.2.2 (we’ll call this the remote network) are 3 more subnets: 10.200.20.0/24, 10.200.200.0/24, and 10.200.222.0/24. We’d like hosts on any of the local networks to reach hosts on any of the remote networks, and vice versa. Rather than take the arduous route of 18 phase 2 selectors, we’ll use one set of phase 2 selectors plus a GRE tunnel, which makes it trivial to scale up or down in the future.

The first thing we’ll do is create a bare IPsec tunnel for the GRE tunnel to sit on top of. This is the only thing we’ll need a phase 2 selector for, and the IPs we assign to the tunnel endpoints form an unroutable island unto themselves. They exist only to provide the outer endpoints for our GRE tunnel. In this example, we’ll use 10.0.0.2/31 on the local side and 10.0.0.3/31 on the remote side.
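A /31 holds exactly two addresses, and on point-to-point links both can be used as host addresses (RFC 3021), which is why it’s a natural fit here. This sketch uses Python’s `ipaddress` module to derive one end of a /31 link from the other and to check that two endpoint addresses really form a pair. The helper names are hypothetical.

```python
from ipaddress import IPv4Address, ip_interface


def p2p_peer(local: str) -> IPv4Address:
    """Given one end of a /31 link (e.g. '10.0.0.2/31'), return the other end."""
    iface = ip_interface(local)
    if iface.network.prefixlen != 31:
        raise ValueError(f"{local} is not a /31 point-to-point address")
    a, b = list(iface.network)  # a /31 contains exactly two addresses
    return b if iface.ip == a else a


def same_link(side_a: str, side_b: str) -> bool:
    """True if both addresses are distinct and sit in the same /31."""
    ia, ib = ip_interface(side_a), ip_interface(side_b)
    return ia.network.prefixlen == 31 and ia.network == ib.network and ia.ip != ib.ip


print(p2p_peer("10.0.0.2/31"))                  # 10.0.0.3
print(same_link("10.0.0.2/31", "10.0.0.3/31"))  # True
print(same_link("10.0.0.3/31", "10.0.0.4/31"))  # False: 10.0.0.4 is in 10.0.0.4/31
```

The same check applies to the GRE addressing (10.50.50.2/31 and 10.50.50.3/31) used in the next step.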

Once the IPsec tunnel is up, 10.0.0.2 and 10.0.0.3 should be able to ping each other, confirming that we have a secure link between 1.1.1.1 and 2.2.2.2.

Now we set up the GRE tunnel. A GRE tunnel is configured with a minimum of 4 parameters. The first two are the source and destination IPs of the tunnel, which in this case are the IPsec tunnel endpoints, 10.0.0.2 and 10.0.0.3. The third is the IP of the GRE tunnel interface itself on the local side; in our example, we use 10.50.50.2/31. The final parameter is the far-side IP of the GRE tunnel on the remote side; here we use 10.50.50.3/31.
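The four parameters map naturally onto a small record. This hypothetical `GreTunnel` dataclass (not any vendor’s configuration syntax) holds them and derives the remote device’s configuration by mirroring: source and destination swap, and so do the local and far-side tunnel addresses. It also enforces the assumption made in this example that both GRE addresses are distinct and share one subnet.

```python
from dataclasses import dataclass
from ipaddress import ip_interface


@dataclass(frozen=True)
class GreTunnel:
    source: str       # local IPsec tunnel endpoint, e.g. "10.0.0.2"
    destination: str  # remote IPsec tunnel endpoint, e.g. "10.0.0.3"
    address: str      # this side's GRE interface address, e.g. "10.50.50.2/31"
    peer: str         # far side's GRE interface address, e.g. "10.50.50.3/31"

    def __post_init__(self) -> None:
        ours, theirs = ip_interface(self.address), ip_interface(self.peer)
        if ours.network != theirs.network or ours.ip == theirs.ip:
            raise ValueError("GRE addresses must be distinct and on the same subnet")

    def mirror(self) -> "GreTunnel":
        """The matching configuration for the device on the other end."""
        return GreTunnel(self.destination, self.source, self.peer, self.address)


local = GreTunnel("10.0.0.2", "10.0.0.3", "10.50.50.2/31", "10.50.50.3/31")
remote = local.mirror()
print(remote.source, remote.address)  # 10.0.0.3 10.50.50.3/31
```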

Here we again perform a test, this time for GRE connectivity. 10.50.50.2 should be able to ping 10.50.50.3 and vice versa.

Finally, we configure routing. If we’re using a dynamic protocol like OSPF, we simply enable it on the GRE interface so it advertises routes to, and accepts routes from, the far side of the tunnel. If you’re more traditional and prefer static routes, you would add routes to all the remote subnets via 10.50.50.3, and on the remote side, add routes to all the local subnets via 10.50.50.2. Provided your security policies are set up correctly to allow traffic to flow between all those networks, you’re done.
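With static routes, the local side needs one entry per remote subnet, each pointing at the far end of the GRE tunnel. The sketch below builds that table with the `ipaddress` module and does a longest-prefix-match lookup, the rule a router uses to pick among overlapping routes. The default route and its “internet uplink” next hop are hypothetical placeholders, included only to show that the more specific tunnel routes win.

```python
from ipaddress import ip_address, ip_network
from typing import Optional

# Local side's routing table as (prefix, next hop) pairs.
ROUTES = [
    (ip_network("10.200.20.0/24"), "10.50.50.3"),
    (ip_network("10.200.200.0/24"), "10.50.50.3"),
    (ip_network("10.200.222.0/24"), "10.50.50.3"),
    (ip_network("10.50.50.2/31"), "direct (GRE interface)"),
    (ip_network("0.0.0.0/0"), "internet uplink (hypothetical)"),
]


def lookup(destination: str) -> Optional[str]:
    """Longest-prefix match: the most specific route containing the destination wins."""
    dst = ip_address(destination)
    matches = [(net, hop) for net, hop in ROUTES if dst in net]
    if not matches:
        return None
    _, best_hop = max(matches, key=lambda route: route[0].prefixlen)
    return best_hop


print(lookup("10.200.200.200"))  # 10.50.50.3
print(lookup("10.50.50.3"))      # direct (GRE interface)
```

Adding a fourth remote subnet is just one more entry in `ROUTES`; nothing about the IPsec phase 2 selectors changes.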

You have a full mesh network without the complexity of a multitude of phase-2 IPsec selectors. As you add or remove networks, you just add or remove static routes as necessary (and, as mentioned before, this can be made even easier by fully automating routing with a dynamic routing protocol such as OSPF).

Let’s take a step back and look at why this works with a packet flow example:

- Host 10.100.100.100 wants to open an HTTP connection to 10.200.200.200, so it sends a TCP SYN packet off to its router.

- The router knows 10.200.200.200 is reachable via 10.50.50.3, the far end of the GRE tunnel (the near end being its own 10.50.50.2), so it forwards the packet to that IP. This packet will traverse the GRE tunnel, colored black in the example.

- The packet enters the GRE tunnel interface, where it is wrapped in a GRE header and a new outer IP header sourced from 10.0.0.2 and destined for 10.0.0.3, the GRE tunnel endpoints that live on the IPsec tunnel. Because 10.0.0.3 is reached through the IPsec tunnel, this GRE packet is handed to the IPsec tunnel itself, shown as red in the example.

- The IPsec device now applies ESP: it encrypts the GRE packet, adds an integrity check value for authentication, and wraps the result in an ESP header and another outer IP header, sourced from the local IPsec device’s public IP, 1.1.1.1, and destined for the remote IPsec device, 2.2.2.2. In the example, this is the green colored section.

- The ESP packet traverses the internet, encrypted and integrity-protected, and arrives at 2.2.2.2.

- 2.2.2.2 now reverses each of the previous encapsulations. First, it authenticates and decrypts the ESP packet, stripping off the outermost layer of encapsulation. Inside is the GRE packet, sourced from 10.0.0.2 and destined for the remote IPsec tunnel endpoint, 10.0.0.3, so it is delivered to 10.0.0.3.

- 10.0.0.3 sees that the packet is a GRE packet and strips the GRE encapsulation off. It now sees the inner packet, sourced from 10.100.100.100 with a destination of 10.200.200.200.

- Consulting its routing table, it knows where that host is and forwards the original packet on to the destination host.
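The nesting in the steps above can be modeled as a stack of headers. This sketch uses a hypothetical `Header` record and plain lists, with no real packet bytes and no actual encryption, to show which addresses each layer carries and that the receiving side removes the layers in reverse order. It assumes ESP in tunnel mode, where the outermost IP header carries the public addresses.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Header:
    layer: str
    src: str
    dst: str


# A packet is modeled as a list of headers, outermost first; the payload is omitted.
Packet = List[Header]


def encapsulate(packet: Packet, outer: Header) -> Packet:
    return [outer] + packet


def decapsulate(packet: Packet, expected_layer: str) -> Packet:
    if packet[0].layer != expected_layer:
        raise ValueError(f"expected {expected_layer}, found {packet[0].layer}")
    return packet[1:]


original: Packet = [Header("IP", "10.100.100.100", "10.200.200.200")]

# Sender: GRE between the IPsec tunnel endpoints, then ESP between the public IPs.
# (In reality, everything inside the ESP layer is encrypted on the wire.)
gre = encapsulate(original, Header("IP+GRE", "10.0.0.2", "10.0.0.3"))
on_the_wire = encapsulate(gre, Header("IP+ESP", "1.1.1.1", "2.2.2.2"))

for header in on_the_wire:
    print(header.layer, header.src, "->", header.dst)
# IP+ESP 1.1.1.1 -> 2.2.2.2
# IP+GRE 10.0.0.2 -> 10.0.0.3
# IP 10.100.100.100 -> 10.200.200.200

# Receiver (2.2.2.2): strip ESP first, then GRE, recovering the original packet.
received = decapsulate(decapsulate(on_the_wire, "IP+ESP"), "IP+GRE")
assert received == original
```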

And there you have it.

GRE is an efficient protocol and adds little processing overhead as it encapsulates and decapsulates packets. IPsec ESP encapsulation, on the other hand, can be processor-intensive, especially at high packet rates, which is why choosing the right encryption and authentication algorithms is vital to maintaining a responsive network and high throughput.

On a final note, some vendors, such as Juniper, have come to their senses on IPsec routing and implemented an even cleaner way to get this done that bypasses the GRE-over-IPsec layering entirely. The configuration is similar, though beyond the scope of this particular article. If you’re interested in discussing it, contact me.

It should be fairly clear why using standard routing tools is a far more scalable and efficient way to configure IPsec tunnels. Unfortunately, configuring tunnels this way is less common than brute-forcing the matter with a slew of phase 2 selectors. To be honest, I’m not sure why this is, as any systems or network administrator worth his or her salt will choose efficiency over chaos. I suspect much of it has to do with the way IPsec can come off as mysterious and arcane, and once it’s working, many wash their hands of it (until it breaks!). Fortunately, SCTG has wide experience with various vendor IPsec implementations over many years. While we have our favorite IPsec vendor implementations (shout out to Juniper), we’ve worked with clients whose devices have ranged from DIY Linux endpoints to chassis-based security blades, and we encourage deploying IPsec tunnels this way. With all that experience, we’re often asked why we implement our tunnels a certain way. Hopefully this series has shed some light on why we do it the way we do.